Cognitive Agent: Solving 12x More Visual Puzzles Than GPT-4o

1. Project Overview

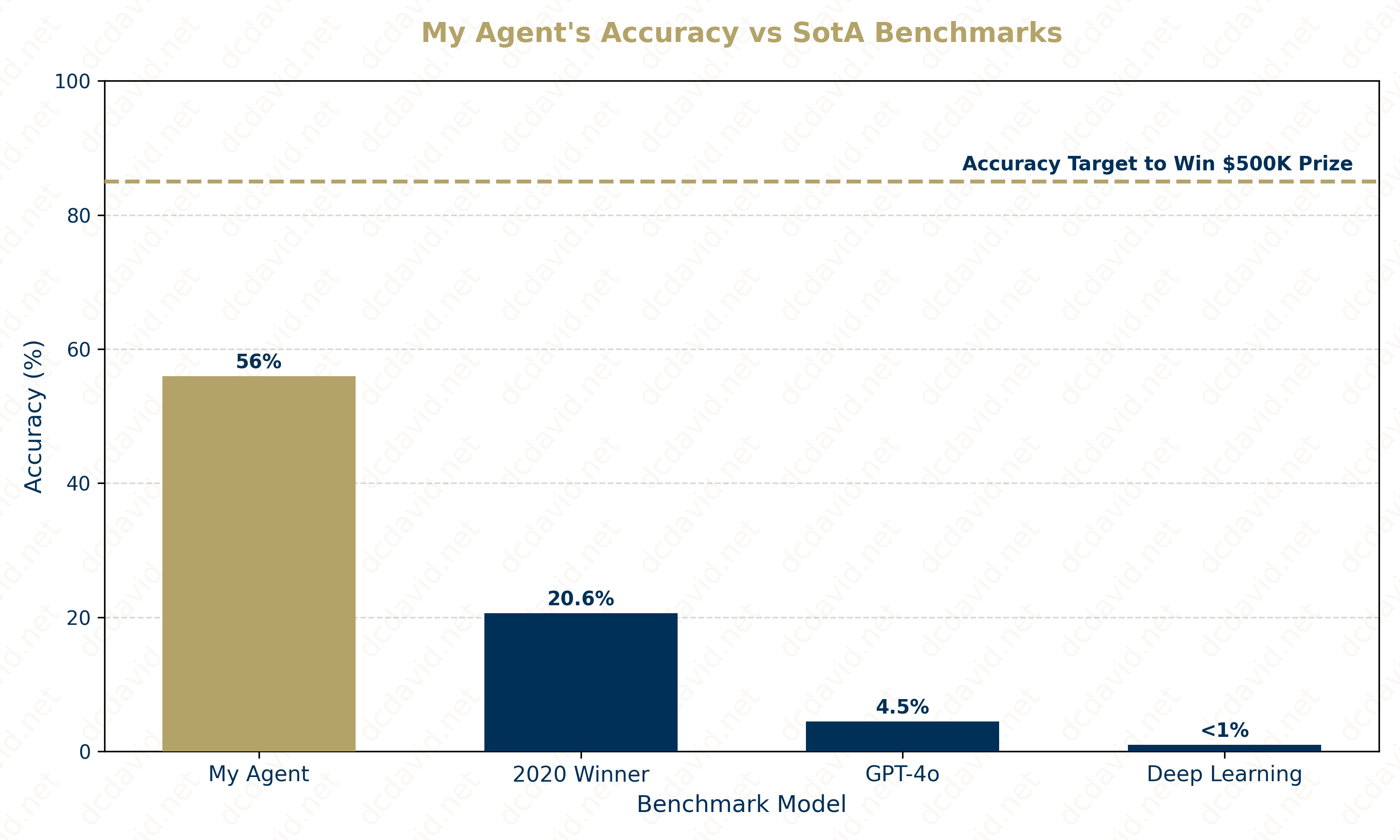

The Cognitive Agent, powered by a Production System with vectorized perception, solves abstract reasoning puzzles from the ARC-AGI benchmark. The agent achieved 56% accuracy (54/96 tasks). This significantly outperforms the winner of the 2020 Kaggle ARC Challenge (20.6%) and dominates modern Large Language Models such as GPT-4o (4.5%). Outperforming these baselines demonstrates that explicit knowledge representation is what allows an agent to generalize novel transformation rules from minimal data, which is one key advantage of Symbolic Reasoning (traditional AI) over Deep Learning (modern AI).

2. Context & Problem Statement

- The Problem: The objective was to solve the ARC-AGI benchmark. It is an intelligence test that requires inferring abstract transformation rules (e.g., rotation, intersection) from just 3-4 examples and generalizing them to a novel test case. This required implementing a Symbolic Production System that reasons about semantics, which is where modern Deep Learning models typically fail because they rely on memorizing massive datasets.

- The Challenge of Generalization:

- The Theoretical Barrier: Analogical transfer requires identifying Deep Similarity (relationships between objects) rather than just Shallow Similarity (visual features like pixel color). Deep Learning models often fail on ARC because they rely on statistical correlations of shallow features, whereas ARC requires mapping deeper, abstract relationships (e.g., "inside of," "aligned with") across visually dissimilar examples.

- The Benchmark: ARC is a steep barrier to entry, even for State-of-the-Art (SOTA) models. Deep Learning approaches typically score below 1%, and massive models like GPT-4o achieve only 4.5% on the original benchmark.

- The Goal: My agent had to prove that a Symbolic Production System could bridge this generalization gap, specifically by demonstrating that explicit logic (operating on semantic objects rather than pixels) allows for rule inference in scenarios where statistical pattern matching fails.

- Academic Angle: This project implements Visuospatial Reasoning. As defined in the Knowledge-Based AI (KBAI) course, this involves reasoning where "Visual deals with the what part" (identifying objects) and "Spatial deals with the where part" (their relationships). For example, imagine a picture of the Sun above a field. The what part is the Sun and the field, and the where part is that the Sun is above the field.
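The what/where split can be sketched in a few lines of NumPy. This is a minimal illustration, not the agent's code. It assumes each object is simply the set of cells of one color, which is a simplification. The helpers `bounding_box` and `is_above` and the color codes are hypothetical.

```python
import numpy as np


def bounding_box(grid: np.ndarray, color: int):
    """'What': locate the object of a given color as (top, bottom, left, right), or None."""
    rows, cols = np.nonzero(grid == color)
    if rows.size == 0:
        return None
    return rows.min(), rows.max(), cols.min(), cols.max()


def is_above(grid: np.ndarray, color_a: int, color_b: int) -> bool:
    """'Where': True if object A lies entirely above object B."""
    box_a = bounding_box(grid, color_a)
    box_b = bounding_box(grid, color_b)
    if box_a is None or box_b is None:
        return False
    return bool(box_a[1] < box_b[0])  # A's bottom row is above B's top row


SUN, FIELD = 4, 3  # hypothetical color codes
picture = np.array([
    [0, 4, 0],
    [0, 0, 0],
    [3, 3, 3],
])
print(is_above(picture, SUN, FIELD))  # True
```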

3. Methodology & Tech Stack

- Architecture: To achieve Visuospatial Reasoning, the agent is architected as a Production System, which is also the basis for its Creativity. The course literature defines a Production System as a cognitive architecture in which knowledge is represented as rules (Condition-Action pairs). My agent implements this with a hierarchy of decisions:

- IF (`condition_met`): The agent perceives a specific visual pattern (e.g., `is_a_rotation`).

- THEN (`action_to_take`): The agent triggers a corresponding transformation (e.g., `do_rotation`).

- Design Decision (Procedural vs. Episodic): I prioritized Procedural Knowledge (knowledge of how to perform actions) over Episodic Memory (storage of specific past experiences). While cognitive architectures like SOAR integrate both, I constrained the agent to Procedural Knowledge to ensure deterministic behavior and linear runtime scaling (\(O(N)\)).

- Tech Stack:

- Language: Python

- Core Libraries: NumPy for high-performance matrix manipulation
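The IF/THEN hierarchy described in the Architecture bullet can be sketched as an ordered list of condition-action pairs. This is a minimal illustration, not the agent's actual code. `is_a_rotation` and `do_rotation` borrow the names used in this write-up, but they are assumed here to mean a 90-degree clockwise rotation. `is_a_horizontal_flip`, `do_horizontal_flip`, `RULES` and `solve` are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

import numpy as np

Grid = np.ndarray
Pair = Tuple[Grid, Grid]  # (training input, training output)


def is_a_rotation(pairs: List[Pair]) -> bool:
    """Condition: every training output equals its input rotated 90 degrees clockwise."""
    return all(np.array_equal(np.rot90(inp, k=-1), out) for inp, out in pairs)


def do_rotation(grid: Grid) -> Grid:
    """Action: rotate the grid 90 degrees clockwise."""
    return np.rot90(grid, k=-1)


def is_a_horizontal_flip(pairs: List[Pair]) -> bool:
    """Hypothetical condition: every output is its input mirrored left-to-right."""
    return all(np.array_equal(np.fliplr(inp), out) for inp, out in pairs)


def do_horizontal_flip(grid: Grid) -> Grid:
    """Hypothetical action: mirror the grid left-to-right."""
    return np.fliplr(grid)


# Production rules as (condition, action) pairs, checked in priority order.
RULES: List[Tuple[Callable[[List[Pair]], bool], Callable[[Grid], Grid]]] = [
    (is_a_rotation, do_rotation),
    (is_a_horizontal_flip, do_horizontal_flip),
]


def solve(train_pairs: List[Pair], test_input: Grid) -> Optional[Grid]:
    for condition, action in RULES:
        if condition(train_pairs):     # IF the pattern holds for every training pair...
            return action(test_input)  # ...THEN fire the matching transformation
    return None                        # no rule matched: abstain


train = [(np.array([[1, 2], [3, 4]]), np.array([[3, 1], [4, 2]]))]
prediction = solve(train, np.array([[5, 6], [7, 8]]))  # [[7, 5], [8, 6]]
```

Because each condition is checked against every training pair, a rule fires only when it explains all of the examples.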

4. Implementation & Key Features

- Feature 1: Overcoming slow pixel-level scanning: Iterating through every pixel of an \(N \times N\) grid has a time complexity of \(O(N^2)\), and a suboptimal implementation could be even slower.

- Solution: I utilized NumPy's vectorized operations. This allowed the agent to perceive problems at the object level, enabling it to recognize shapes, lines, and blocks as single entities in linear time.

- Feature 2: Avoiding Combinatorial Explosion: A naive Production System for 96 distinct problems risks accumulating an unmanageable number of convoluted rules and one-off transformations.

- Solution: I implemented a modular library of "heuristic primitives" (e.g., `is_a_rotation`, `is_connect_the_dots`). These primitives were reusable across different problem types, allowing the agent to solve new problems by combining existing primitives rather than requiring unique hard-coded rules for every task.
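To illustrate Features 1 and 2 together, here is a hedged sketch in which every primitive is a single whole-array NumPy call, so there are no per-pixel Python loops. New behaviors come from composing primitives rather than from writing new rules. The primitive names, `run` and the bounded search in `find_program` are hypothetical. The agent's actual primitives, such as `is_connect_the_dots`, are not reproduced here.

```python
from itertools import product

import numpy as np


# Hypothetical primitive library: each primitive is one whole-array NumPy call.
def rotate_cw(g: np.ndarray) -> np.ndarray:
    return np.rot90(g, k=-1)


def flip_lr(g: np.ndarray) -> np.ndarray:
    return np.fliplr(g)


def flip_ud(g: np.ndarray) -> np.ndarray:
    return np.flipud(g)


def transpose(g: np.ndarray) -> np.ndarray:
    return g.T


PRIMITIVES = [rotate_cw, flip_lr, flip_ud, transpose]


def run(program, grid: np.ndarray) -> np.ndarray:
    """Apply a sequence of primitives from left to right."""
    for step in program:
        grid = step(grid)
    return grid


def find_program(train_pairs, max_len: int = 2):
    """Return the shortest primitive sequence consistent with every training pair, or None."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(np.array_equal(run(program, inp), out) for inp, out in train_pairs):
                return program
    return None


inp = np.array([[1, 2], [3, 4]])
out = np.array([[4, 3], [2, 1]])  # the input rotated 180 degrees
program = find_program([(inp, out)])
print([step.__name__ for step in program])  # ['rotate_cw', 'rotate_cw']
```

Capping `max_len` keeps the search small: there are 4 + 16 = 20 candidate programs here. That is one way to stop a growing primitive library from reintroducing combinatorial explosion.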

5. Results & Impact

- Quantifiable Metrics: The agent correctly solved 54 out of 96 evaluation problems (56% Accuracy).

- Impact:

- Grading Threshold: This score significantly exceeded the course requirement for maximum credit (100/100) in all four milestones of this semester-long project.

- SOTA Comparison: The agent outperforms the winner of the Kaggle 2020 ARC Challenge (20.6%) and dominates modern SOTA AI models like GPT-4o, which scores only 4.5% on ARC without external tools.

- Visuals:

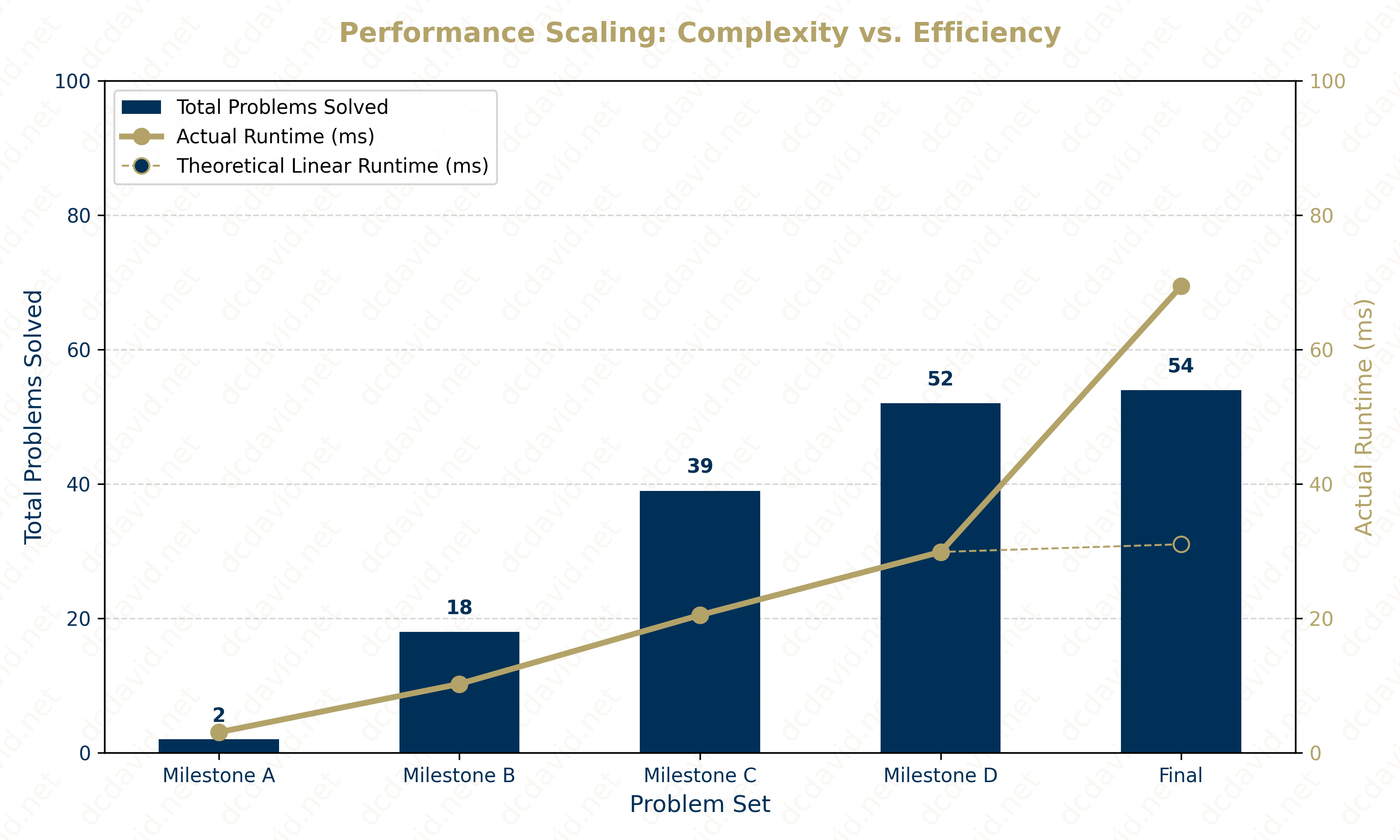

The dual-axis chart below visualizes the relationship between the agent's problem-solving capacity (Navy Bars) and its computational cost (Gold Line).

For Milestones A through D, runtime grows roughly linearly with the number of problems solved rather than super-linearly. This indicates that the vectorized Production System successfully avoided the \(O(N^2)\) complexity challenges that a pixel-based reasoning approach might face.

There is a significant uptick in runtime between Milestone D and the Final submission: a 3.6% increase in problems solved resulted in a 132.39% increase in runtime. The root cause was one of the two extra problems solved for the Final, which involves performing an \(O(N)\) linear operation \(N\) times. This particular problem could only be implemented in \(O(N^2)\). Each operation had variability that required line-by-line processing rather than a single operation on the whole two-dimensional array. This one \(O(N^2)\) problem then dominates the total runtime, skewing the agent's overall scaling from \(O(N)\) toward \(O(N^2)\).
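The write-up does not describe the task behind the runtime jump, so the following is a purely hypothetical example of row-by-row processing. Each row's colored cells slide to the left edge. Because every row holds a different number of them, the grid is processed one row at a time. The \(N\) Python-level iterations, each an \(O(N)\) pass over one row, account for the \(O(N^2)\) behavior. The function name `compact_rows_left` is illustrative only.

```python
import numpy as np


def compact_rows_left(grid: np.ndarray, background: int = 0) -> np.ndarray:
    """Hypothetical row-wise task: slide each row's colored cells to the left edge.

    Rows contain different numbers of colored cells, so each row needs its own
    shift and the grid is processed one row at a time.
    """
    out = np.full_like(grid, background)
    for i, row in enumerate(grid):      # N rows...
        cells = row[row != background]  # ...each scanned in O(N)
        out[i, :cells.size] = cells
    return out


grid = np.array([
    [0, 1, 0, 2],
    [3, 0, 0, 0],
    [0, 0, 0, 0],
])
print(compact_rows_left(grid))
```

By contrast, a rule that treats every row identically, such as `np.rot90(grid, k=-1)`, is a single call on the whole array.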

6. Challenges & Limitations

- Success: Analogical Reasoning: The agent excels at Analogical Reasoning (the ability to infer "A is to B as C is to D"). It successfully identifies the relationship between the Training Input (A) and Training Output (B) and transfers that relationship to the Test Input (C) to generate the Test Output (D).

- Limitation: Re-representation: The primary limitation is Problem Re-representation. The agent operates on a fixed set of visual primitives. If a problem requires a novel representation to be solvable, the agent cannot dynamically "reformulate" the problem space.

- Failure Example (Enclosed Objects): The agent failed on tasks requiring it to identify objects "trapped" inside a bigger shape. Since the `is_enclosed` primitive was not strictly defined in the agent's Semantic Knowledge, it could not "see" these objects.

- Future Work: A next-generation agent would expand on the agent's Creativity. According to Dr. Ashok Goel, an agent that generalizes through Analogical Reasoning can be called creative because it solves something novel that it was never explicitly trained on. Another indicator of Creativity is the ability to perform Re-representation. This would allow the agent to first run through its Production System to check whether any of its rules match the problem. If none do, it could construct a new representation of the problem using the rules and transformations in its Procedural Knowledge.
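The agent lacked an `is_enclosed` primitive, so here is one hypothetical way such a check could be written. It is an assumption, not the agent's code. A flood fill starts from the grid border and passes through every cell that is not the enclosing shape's color. It grows one step at a time using vectorized NumPy shifts. Any non-wall cell the fill never reaches is trapped inside. The helper `enclosed_cells` and the `wall_color` parameter are illustrative names.

```python
import numpy as np


def enclosed_cells(grid: np.ndarray, wall_color: int) -> np.ndarray:
    """Hypothetical primitive: boolean mask of non-wall cells sealed off from the border.

    Seeds a flood fill at every non-wall border cell and grows it one step per
    iteration with whole-array shifts (4-connectivity) until it stops changing.
    """
    passable = grid != wall_color
    reached = np.zeros_like(passable)
    reached[0, :] |= passable[0, :]
    reached[-1, :] |= passable[-1, :]
    reached[:, 0] |= passable[:, 0]
    reached[:, -1] |= passable[:, -1]
    while True:
        grown = reached.copy()
        grown[1:, :] |= reached[:-1, :]  # spread down
        grown[:-1, :] |= reached[1:, :]  # spread up
        grown[:, 1:] |= reached[:, :-1]  # spread right
        grown[:, :-1] |= reached[:, 1:]  # spread left
        grown &= passable                # the fill cannot cross the wall
        if np.array_equal(grown, reached):
            return passable & ~reached
        reached = grown


def is_enclosed(grid: np.ndarray, wall_color: int, background: int = 0) -> bool:
    """True if some non-background object is trapped inside the wall."""
    return bool(np.any(enclosed_cells(grid, wall_color) & (grid != background)))


grid = np.array([
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 2, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 2],
])
print(is_enclosed(grid, wall_color=1))  # True: the 2 in the center is trapped
```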

7. Course Reflection

- Synthesis: This project served as a practical implementation of the Deliberative Agent architecture, which consists of an Input, a Deliberation stage (Learning, Reasoning, and Memory), and an Output. It demonstrated that "Intelligence is a function that maps perceptual history into action."

- Algorithm Design (A* vs. Heuristics): In an earlier project, I implemented an A* Search algorithm to solve planning problems. Comparing that experience to the ARC-AGI project highlighted the trade-off between Search (guaranteed optimality but high compute) and Heuristic Rules (fast but brittle). While A* was perfect for the defined state-space of Block World, the infinite state-space of ARC required the faster, heuristic-driven Production System approach.

- Key Takeaway: There is an old saying in AI by the pioneer Marvin Minsky: "In AI, if you have the right knowledge representation, problem solving becomes very easy." By representing the ARC grids not as raw bitmaps but as semantic objects (Shapes, Lines, Backgrounds), I transformed an intractable search problem into a solvable rule-matching problem.

8. Interactive Challenge: Can You Solve This?

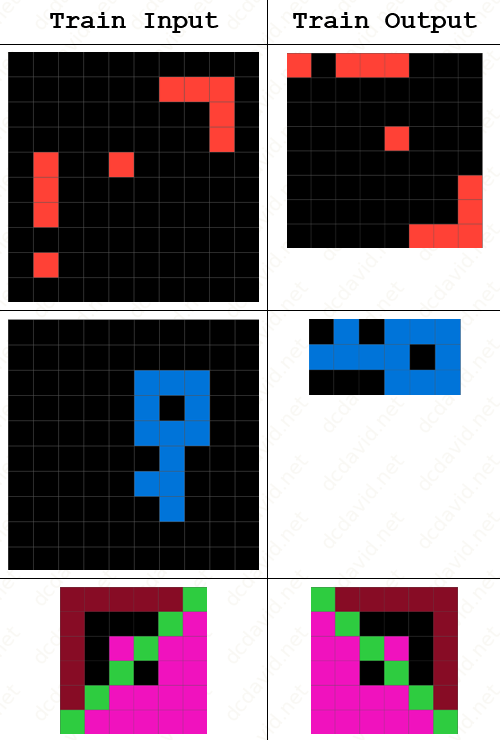

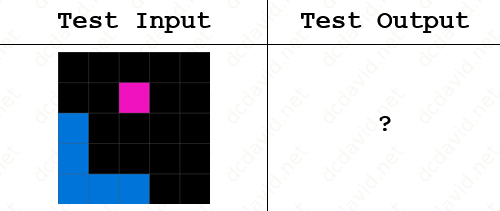

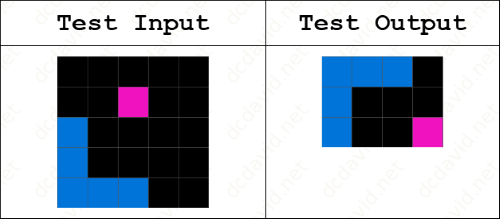

To understand the complexity of the ARC-AGI benchmark, try solving this sample problem yourself. I created this example to demonstrate the type of abstract reasoning required. It does not use protected dataset materials from either the KBAI course or the ARC-AGI competition.

The Challenge:

- Analyze the Training Pairs: Look at the three examples below. How does the grid on the left transform into the grid on the right?

- Infer the Rule: Is it a color change? A movement? A logical operation like XOR?

- Apply to Test: Apply that same rule to the unseen "Testing Input".

The Solution:

Humans can intuitively see the two transformations of this image. The first is to rotate the image 90 degrees clockwise. The second is to trim the all-black columns and all-black rows around the object.
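The two-step solution can be sketched in NumPy. This sketch assumes black is encoded as 0, as in the ARC color palette, and uses a small made-up grid rather than the puzzle in the images. `holds_for_all` checks the hypothesis against every training pair before it would be applied to a test input. All function names are hypothetical.

```python
import numpy as np

BLACK = 0  # assumption: black is color 0, as in the ARC palette


def rotate_clockwise(grid: np.ndarray) -> np.ndarray:
    return np.rot90(grid, k=-1)


def trim_black_border(grid: np.ndarray) -> np.ndarray:
    """Crop the all-black rows and columns surrounding the object."""
    rows = np.flatnonzero(np.any(grid != BLACK, axis=1))
    cols = np.flatnonzero(np.any(grid != BLACK, axis=0))
    if rows.size == 0:
        return grid  # an all-black grid has no object to crop to
    return grid[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def transform(grid: np.ndarray) -> np.ndarray:
    """Hypothesis: rotate 90 degrees clockwise, then trim the black border."""
    return trim_black_border(rotate_clockwise(grid))


def holds_for_all(train_pairs) -> bool:
    """Accept the hypothesis only if it reproduces every training output."""
    return all(np.array_equal(transform(inp), out) for inp, out in train_pairs)


# Made-up training pair (not the puzzle in the images).
example_in = np.array([
    [0, 0, 0, 0],
    [0, 2, 2, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
])
example_out = np.array([
    [0, 2],
    [1, 2],
])
print(holds_for_all([(example_in, example_out)]))  # True
```

Slicing to the bounding box, rather than masking out every all-black row, keeps any black rows or columns that lie inside the object.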

To solve this without hard-coded instructions, my agent had to autonomously:

- Perceive: Recognize distinct objects (e.g., Islands of Red Squares, Blue Key, Box with 4 Colors).

- Reason: Detect the spatial relationship changes between Input and Output (i.e., the Input is rotated 90 degrees clockwise, and then the all-black columns and rows outside of the object are removed to produce the Output).

- Generalize: Confirm this rule holds true across all training examples, rejecting incorrect hypotheses.

- Execute: Apply the validated transformation to the Test Input below to generate the final prediction.

- Maintain Efficiency: The agent must perform this entire reasoning loop for 96 different problems (each with unique rules, transformations, grid sizes, and pixel configurations) while maintaining an average runtime of under 70ms per problem.

Solve the Problem:

9. Course Review

- KBAI: Overall, the subject matter is a refreshing change of pace in a world where AI is thought to be 90% about GenAI/LLMs, 9% about Deep Learning, and (a quite generous overestimation of) 1% about "Traditional AI" or Symbolic AI. Knowledge-Based AI as a course has great teaching assistants, great lectures, and engagingly diverse assignments. Fall and Spring semesters are 17 weeks long, but I took this class in the shortened 12-week Summer semester. It took me an average of about 22 hours per week to juggle peer-reviewing 9 papers, 2 lectures, and 1 homework + 1 milestone or 1 mini-project. The peer reviews enable engagement with other students' ideas, and the milestones keep students accountable for the semester-long ARC-AGI project.

- Advice for other students: This course is one of the MSCS courses that allow working ahead of the course schedule. Front-loading the peer reviews (i.e., reviewing 9 papers instead of the minimum of 5) was particularly effective in lightening the load around the middle of the semester, when the work volume got denser and the projects got tougher. Weeks 4, 5, and 6 of the summer KBAI semester were especially heavy. This stretch of the semester involved the following:

- 6 lectures totalling about 5 hours

- Block World Mini-Project 2, the hardest project in the class, which requires A* Search for full credit

- ARC-AGI Milestone B, the first significant milestone, requiring 12 solved problems

- Homework 2 (Concept Learning)

- Mini-Project 3 (Natural Language Processing)

- Midterm Exam 1

- Reviewing a minimum of 15 papers for full participation credit